In my 5th article on AI, I take a detailed look at the dry but critical aspects that pose the greatest risks if companies, governments and various bad actors are allowed to exploit AI. We are all aware of the need for guardrails, but how do we define and apply the rules in such a fast-moving space?

Unprecedented times

We live in a world recently festooned with AI hyperbole, much of which is highly likely to come true at some point in the future. Parallels have been drawn with the dotcom boom and the doom of the bust that followed. As a pragmatist, I sit on the positive side, but with heavy caveats. However, I’m not alone in saying that this tech revolution is far bigger than dotcom. AI is broader ranging and, over time, will have an unprecedented impact, good or bad, on almost everything we do.

In the past year I’ve closely followed what has become a daily deluge of updates and breakthrough announcements. Yet, looking back, very few of the headlines were about progress in the less sexy areas of governance, ethics, regulation and bias (which I refer to as GERB).

This is likely to change throughout 2024 as efforts ramp up to rein in what many feel is a dangerously out-of-control body of AI development across almost every industry, and to tackle the unintended or malicious activity in which AI plays a central role.

Governance

Definition: Governance covers frameworks, policies, and procedures and is used to ensure AI systems are accountable, transparent, and operate within legal and ethical boundaries.

Note: Unlike Regulation, the implementation of governance in AI is primarily an internal facing activity.

Like kids with the keys to a well-stocked sweet shop, we have let the whole school loose, and they haven’t had their breakfast. To extend the analogy, some of the kids are a little more restrained and remember the last time they got sick from too many cola cubes. Some have older siblings who keep them in check. The rest are ravenous and within minutes are pirouetting in a sugar-induced daze, with destructive effect.

OK, so enough visualising. We can see how this free-for-all has manifested in recent news stories:

- Air Canada Chatbot Misinformation: In 2022, Air Canada’s AI-powered chatbot gave a customer false information about discounted fares for bereaved travellers. The lack of internal oversight and clear guidelines for the chatbot’s responses left the company exposed: in early 2024 a Canadian tribunal held Air Canada responsible for the misinformation and ordered it to compensate the customer

- Facebook AI Hiring Bias: In 2022, an internal investigation at Facebook revealed that its AI-powered recruitment tool discriminated against female and minority candidates. The lack of proper testing and ethical consideration during the development and implementation of the AI system resulted in biased outcomes

The 2024 US presidential election and the upcoming UK general election have brought GERB into sharp focus. The risk of bad actors using AI to interfere with these events feels all the more real given the painful memories of Cambridge Analytica, a reminder of how much harm data-driven technology can do in the wrong hands.

Because automation is inherent to AI, it significantly lowers the bar for creating applications. That multiplies the level of risk and vastly increases the cost and effort required to fight deepfakes, voice manipulation, coercion through data misuse and more.

There is no question that the following examples were created with the most positive intent, and they have been held up as prime examples of AI development supremacy. In the wrong hands, however, this kind of tech could cause major global issues.

ElevenLabs can take text or existing video content and convert it into multiple languages, using AI to create lip sync that matches the chosen language.

OpenAI’s Sora text-to-video model has astounded some and horrified others with its ability to create highly realistic video, up to a minute long, from a single text prompt. Access is restricted to carefully curated red team members for now, but this development is possibly the most jaw-dropping AI step change so far, with many claiming its modelling architecture is a precursor to achieving Artificial General Intelligence (AGI).

Only six months ago, many experts would have considered Sora decades away from being a reality.

In late January 2024, a robocall reached voters ahead of the New Hampshire Democratic presidential primary. The call used a very convincing AI-generated voice of President Biden, urging people not to vote and to save their vote for later in the year “when it really mattered”. Political consultant Steve Kramer has since admitted to commissioning the AI-generated robocall, but in reality the damage had already been done.

Given the potential for harm through highly convincing robocalls, the US Federal Communications Commission (FCC) has since ruled that robocalls using AI-generated voices are illegal. Don’t expect this to be the last AI activity to receive such swift, punitive measures. More on this under Regulation, below.

Ethics

Definition: The moral principles guiding AI development and use, ensuring they benefit society and do not harm individuals.

Investment in AI is scaling rapidly, yet the allocation for ethical oversight and regulatory compliance, despite the right noises coming from governments, remains disproportionately small. A striking example is the UK’s modest £10 million fund dedicated to preparing and upskilling the regulators tasked with covering AI safety, a drop in the ocean compared to the vast sums fuelling AI development. This discrepancy underscores a critical imbalance that must be addressed, although it is never easy to justify spending in an area where a tangible, quantitative return on investment is hard to demonstrate.

In my third AI article I wrote about the effect of AI on employment. There’s a clear ethical perspective here: companies should not use AI as an excuse to cut operating costs to the detriment of remaining staff, degrading their working conditions and increasing their workloads. This can happen not just through redundancies but also through badly thought-out solutions underpinned by AI tech.

Companies often introduce change poorly, and with the clamour to adopt AI, leaders will make mistakes. With AI able to impact every area of a business, great care must be taken to implement it correctly, for the sake of both employees and the business itself.

Example topics for ethical consideration in AI include:

- Privacy and Surveillance: Balancing the benefits of AI in public safety and services against individual privacy rights and mass surveillance issues

- Transparency and Explainability: Making AI decision-making processes clear, so users understand how and why decisions affecting them are made.

- Accountability: Establishing clear responsibility for AI-driven actions, particularly when they lead to adverse outcomes

- Equitable Access: Guaranteeing that AI technologies are accessible to all segments of society, preventing digital divides or technology-driven inequality

Data use is also a critical AI ethics component:

- Data Privacy: Ensuring personal data used to train AI systems is collected, stored, and processed in a manner respecting individuals’ privacy rights

- Data Accuracy: Maintaining high standards of data accuracy and integrity to prevent AI from making decisions based on flawed or misleading information

- Data Consent: Obtaining clear, informed consent from individuals before their data is used in AI systems, especially for sensitive or personal information

- Data Security: Protecting data from unauthorised access or breaches to prevent misuse of personal information by AI systems

Regulation

Definition: Externally facing rules that overlap with, reinforce and strongly inform corporate governance. These culminate in the legal requirements and standards set by governments to ensure AI systems are developed and used safely, transparently, and without infringing on human rights.

Note: Unlike Governance, Regulation is externally imposed: its rules tend to apply to all corporate entities and individuals operating within the jurisdiction of the governing body that defines them.

The three key geographies spearheading AI regulation are the UK, the European Union and the United States.

United Kingdom

The UK’s AI regulatory approach, as detailed in its recent white paper, emphasises flexibility and innovation, diverging from the EU’s more prescriptive AI Act. The UK opts for a principles-based, sector-specific framework, avoiding new overarching regulators and heavy-handed legislation. It focuses on safety, transparency, fairness, accountability, and redress, allowing existing regulators such as the MHRA and the ICO to apply these principles in ways tailored to their domains.

In contrast, the EU’s AI Act outlines strict lifecycle obligations for AI systems, with new penalties and a centralised regulatory structure. The UK’s adaptable model aims to foster growth and maintain public trust, while the EU prioritises comprehensive risk management.

The UK government recognises both the vast potential of AI and the need for proactive regulation to support innovation. Its response to the consultation, ‘A pro-innovation approach to AI regulation: government response’, deals with all aspects of AI support and regulation.

Key takeaways:

- Pro-Innovation and Pro-Safety: Aiming to foster innovation while ensuring AI systems are safe and trustworthy

- Cross-Sectoral Principles: Principles to guide AI development and deployment: safety, transparency, fairness, accountability, and contestability and redress

- Regulator Support: Part of a £100+ million investment package, with £10 million to boost AI capabilities within regulatory bodies

- Central Function: A new central body will coordinate regulatory efforts and address any gaps

- International Collaboration: The UK is committed to working with global partners to develop a unified approach to AI governance

- Context-Specific Regulation: Existing regulatory bodies to address AI risks within their sectors aligned with the cross-sectoral principles

- Evolving Approach: Recognising that new legislation may be needed as AI technology advances, with a focus on accountability for developers of highly capable AI systems

The paper also identified a number of specific actions and initiatives:

- Publication of AI regulation white paper in March 2023

- Funding for regulator AI capabilities and AI research

- Establishment of an AI Safety Institute

- Hosting global AI Safety Summits

Even though the bigger stories we see will still be reserved for the sexy-tech headlines, under the radar we are rapidly approaching a point where formal governance interventions outlined above will become a reality.

Blanket bans may be applied to certain uses of AI where the intent is obviously nefarious, or where they could do unintentional harm if deployed without stringent guardrails. How many of these bans will be reactions to incidents that have already happened remains to be seen, but, as we know, reactive solutions are far less effective than proactive strategy.

European Union

The European Artificial Intelligence Act (the AI Act) is a powerful piece of proposed legislation governing the ethical use of AI across the European Union.

At its core, the AI Act classifies systems into four distinct risk categories:

- Unacceptable Risk: Applications that are considered a clear threat to people’s safety, livelihoods, and rights. These applications are subjected to an outright ban. Examples include social scoring systems by governments and exploitation of vulnerabilities of children

- High Risk: Systems used in critical infrastructure, education or law enforcement are subject to stringent regulatory requirements before they can be put on the market. These include obligations related to data and record-keeping, transparency, human oversight, and accuracy

- Limited Risk: For systems such as chatbots, the Act mandates transparency. Users should be informed that they are interacting with an AI system, ensuring clarity and preventing deception

- Minimal Risk: Systems in this category, which encompass most AI systems currently in use, are largely free from regulatory constraints under the AI Act. The legislation assumes that these systems pose little threat and do not need stringent controls

This categorisation seeks to ensure AI use respects values and fundamental rights, including privacy, non-discrimination, and consumer protection.
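For product teams, the four tiers above can be thought of as a lookup from use case to obligations. The sketch below is a deliberately simplified illustration, not a legal classification: the `RiskTier` enum, the `USE_CASE_TIERS` mapping and the `classify` and `obligations_for` helpers are hypothetical names, and the use cases and obligations are only those summarised in the list above.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk categories described in the proposed EU AI Act."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical mapping of example use cases (from the summary above) to tiers.
USE_CASE_TIERS = {
    "government social scoring": RiskTier.UNACCEPTABLE,
    "exploiting vulnerabilities of children": RiskTier.UNACCEPTABLE,
    "critical infrastructure": RiskTier.HIGH,
    "education": RiskTier.HIGH,
    "law enforcement": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
}

# Simplified obligations per tier, paraphrasing the list above (hypothetical wording).
TIER_OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["prohibited: do not place on the market"],
    RiskTier.HIGH: ["data and record-keeping", "transparency", "human oversight", "accuracy"],
    RiskTier.LIMITED: ["tell users they are interacting with an AI system"],
    RiskTier.MINIMAL: [],
}


def classify(use_case: str) -> RiskTier:
    """Return the tier for a known use case; anything unlisted defaults to MINIMAL.

    The default mirrors the Act's framing that most systems are minimal risk,
    but a real assessment should never rely on a silent default.
    """
    return USE_CASE_TIERS.get(use_case.lower(), RiskTier.MINIMAL)


def obligations_for(use_case: str) -> list[str]:
    """Look up the simplified obligations attached to a use case's tier."""
    return TIER_OBLIGATIONS[classify(use_case)]


if __name__ == "__main__":
    for case in ["Chatbot", "Law enforcement", "spam filter"]:
        print(f"{case}: {classify(case).value} -> {obligations_for(case)}")
```

Keeping the mapping as plain data means legal and compliance colleagues can review it line by line, which matters more than the code itself.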

Additionally, the AI Act proposes the creation of European and national databases for high-risk AI systems, alongside post-market monitoring measures to enhance transparency and accountability. Penalties for non-compliance follow the model of data protection fines, such as those enforced by the UK Information Commissioner’s Office (ICO), but go further, with fines of up to 6% of global annual turnover for the most serious infringements.

The EU AI Act also has extraterritorial reach. Non-EU entities that provide AI systems in the EU, or whose AI outputs are used in the EU, will need to comply, extending the Act’s reach globally and influencing other nations’ AI standards and practices.

Despite the great potential of the Act, it is still at proposal stage and working its way through the legislative process before becoming law. We can only hope it is given the support it deserves from all stakeholders.

United States

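Executive Order 14110, on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence, was signed by President Biden on 30 October 2023, days before the UK hosted the international AI Safety Summit at Bletchley Park, England, which Vice President Kamala Harris attended. The order has been the key focus of US AI policy since then.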

It’s interesting to note that, despite their similar intentions, there are some key differences between Executive Order 14110 and the EU AI Act:

- Regulatory Approach: The U.S. executive order sets out guiding principles and government agency responsibilities without establishing a new legal framework. The EU AI Act proposes specific legal obligations that apply uniformly across all member states

- Risk Classification: The EU explicitly categorises AI applications based on risk, from minimal to unacceptable, with corresponding regulations. The U.S. order does not categorise AI systems by risk but focuses on broad policy goals

- Scope and Detail: The EU AI Act details explicit stipulations for compliance, assessments, and enforcement mechanisms. The U.S. executive order is less prescriptive, focused on setting objectives for federal agencies

- Global Competitiveness and Innovation: While both aim to maintain leadership in AI, the U.S. executive order places a strong emphasis on maintaining national competitiveness and fostering innovation, whereas the EU AI Act focuses more on safeguarding fundamental rights and safety

- Implementation and Enforcement: The U.S. order primarily affects federal agencies and outlines actions for these bodies, without directly imposing new requirements on the private sector. The EU AI Act, however, will directly impact both public and private entities within the EU, enforcing compliance through established legal mechanisms

It’s important to observe these differences as AI solutions are often globally distributed and your product could fall foul of local regulations.

Existential concerns

Although not an actual regulatory measure, the most publicised of any efforts to control AI was the open letter signed by numerous AI leaders and researchers in March 2023, including Elon Musk and Apple co-founder Steve Wozniak, calling for a six-month pause on training AI systems more powerful than GPT-4 while the risks were assessed.

The letter clearly expresses an existential concern for the future of the human race if AI reaches a superhuman level without the necessary checks and balances in place for us humans to remain in control indefinitely.

Given the signatories’ vested interests, though, the letter was met with more than a little suspicion. Even so, the current trajectory of AI risk suggests their concerns were justified.

Bias

Definition: Unfair prejudices or favouritism encoded into AI systems, often due to the data on which they are trained.

A few reported stories have highlighted bias in AI, much to the embarrassment of the companies involved, and even at a financial cost. In early 2024, shares in Google’s parent company, Alphabet, dropped 4.4% in the wake of overenthusiastic racial sensitivity in Gemini’s image generation.

In a series of highly publicised examples, Google’s Gemini generated images that were just completely wrong. Google’s early forays into image recognition had produced highly embarrassing results, so it turned the sensitivity dial up to 11 to avoid over-representing white folk. The outcome was just as embarrassing, albeit marginally less insulting: female and black popes, and a wealthy 19th-century German couple portrayed as a Chinese woman and a black man.

Repairs, though, are not easy to engineer into a system that has been coded to deliver diversity at every single opportunity, irrespective of historical fact. It is fallout that Google is still battling to resolve.

This also shows that perceived AI performance, good or bad, is now a big enough factor to affect tech companies’ value and put significant pressure on their leadership, with some calling for Google CEO Sundar Pichai to resign over what they see as his failure to grasp AI properly and exploit it commercially.

Beyond the headlines, to reduce the risk of bias, there are a number of design factors that we must build into our AI solutions and into the way we operate our businesses. A few examples include:

Training Data Diversity: Training data for AI models must represent diverse demographics.

Example: An AI hiring tool should be trained on resumes from candidates of various ages, genders, and ethnic backgrounds to prevent bias in candidate selection.
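A first practical step is to measure how each group is represented in the training set before any training happens. The sketch below assumes each record carries a demographic attribute (here a hypothetical `gender` field on toy data) and flags any group whose share falls below a chosen threshold; the 25% threshold is an arbitrary illustration, not a standard.

```python
from collections import Counter


def representation(records: list[dict], attribute: str) -> dict[str, float]:
    """Return each group's share of the records for the given attribute."""
    counts = Counter(r[attribute] for r in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def under_represented(records: list[dict], attribute: str, min_share: float) -> list[str]:
    """List the groups whose share of the training data is below min_share."""
    shares = representation(records, attribute)
    return sorted(g for g, s in shares.items() if s < min_share)


# Toy, hypothetical resume dataset: 8 male and 2 female candidates.
resumes = [{"gender": "male"}] * 8 + [{"gender": "female"}] * 2

print(representation(resumes, "gender"))           # {'male': 0.8, 'female': 0.2}
print(under_represented(resumes, "gender", 0.25))  # ['female']
```

Balanced counts are necessary but not sufficient: a representative dataset can still encode biased historical decisions, so this check complements, rather than replaces, testing the model’s outcomes.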

Algorithm Transparency: Make the AI decision-making process understandable.

Example: A credit scoring AI should clearly explain the factors influencing credit score calculations.
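For a simple linear scoring model, one transparent approach is to report each factor’s contribution (weight multiplied by value) alongside the final score, so the applicant can see what moved it. The weights, base score and factor names below are invented for illustration; real credit models are usually more complex and may need dedicated explainability techniques.

```python
# Hypothetical weights for a toy linear credit score (points per unit of each factor).
WEIGHTS = {
    "years_of_credit_history": 10.0,
    "missed_payments_last_year": -40.0,
    "credit_utilisation_pct": -1.5,
}
BASE_SCORE = 600.0  # hypothetical starting score


def score_with_explanation(applicant: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the score plus each factor's contribution, largest impact first."""
    contributions = [(name, weight * applicant[name]) for name, weight in WEIGHTS.items()]
    total = BASE_SCORE + sum(c for _, c in contributions)
    # Sort by absolute impact so the most influential factors are listed first.
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    return total, contributions


score, reasons = score_with_explanation(
    {"years_of_credit_history": 6, "missed_payments_last_year": 2, "credit_utilisation_pct": 30}
)
print(f"Score: {score:.0f}")  # Score: 535
for factor, impact in reasons:
    print(f"  {factor}: {impact:+.0f} points")
```

Here the applicant sees that two missed payments (-80 points) outweighed six years of credit history (+60 points), which is exactly the kind of explanation the example above calls for.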

Stakeholder Involvement: Include diverse groups in AI development.

Example: Involve community representatives in developing AI policies for public surveillance to ensure community values are reflected.

Legal Compliance: Ensure AI applications follow anti-discrimination laws.

Example: Regularly audit AI job advertisement placements to prevent gender or racial bias violating employment laws.
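A regular audit can start with a simple disparity check. The sketch below borrows the "four-fifths rule" from US employment guidance (the EEOC’s Uniform Guidelines on Employee Selection Procedures), which treats a group’s selection rate below 80% of the highest group’s rate as a sign of possible adverse impact. Here it is applied, as an assumption, to how often a job advert was shown to eligible members of each group. The group labels and counts are made up, and the rule is a screening heuristic, not a legal test of discrimination.

```python
def impact_ratios(shown: dict[str, int], eligible: dict[str, int]) -> dict[str, float]:
    """Each group's delivery rate divided by the highest group's delivery rate."""
    rates = {g: shown[g] / eligible[g] for g in eligible}
    best = max(rates.values())
    return {g: r / best for g, r in rates.items()}


def flag_adverse_impact(
    shown: dict[str, int], eligible: dict[str, int], threshold: float = 0.8
) -> list[str]:
    """Return the groups whose ratio falls below the four-fifths (80%) threshold."""
    return sorted(g for g, r in impact_ratios(shown, eligible).items() if r < threshold)


# Hypothetical audit data: eligible users per group, and how many were shown the advert.
eligible = {"men": 10_000, "women": 10_000}
shown = {"men": 3_000, "women": 1_800}

print({g: round(r, 2) for g, r in impact_ratios(shown, eligible).items()})  # {'men': 1.0, 'women': 0.6}
print(flag_adverse_impact(shown, eligible))  # ['women']
```

A flag is a prompt for investigation (for example, into the ad platform’s targeting settings), not a conclusion in itself.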

Development Opportunities

Global growth potential

Amid all the restrictive doom and dangerous gloom of AI, we must also recognise the good that comes from national and international collaborative GERB efforts, which will allow companies to flourish with minimal risk. The number of AI startups is increasing rapidly, and we can expect significant gains to be realised once the transition period for mass commercial AI adoption is over.

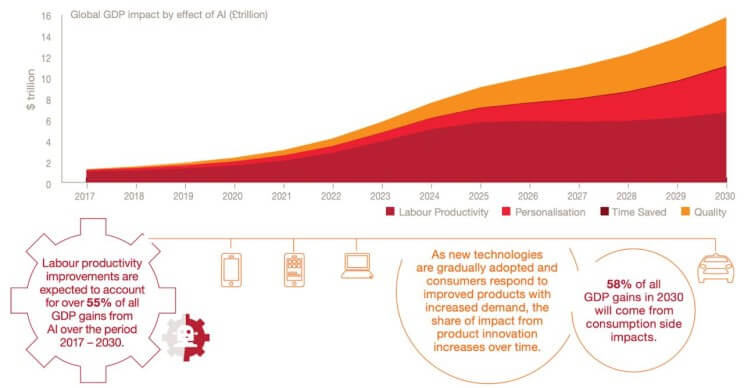

PricewaterhouseCoopers (PwC) reports that the global artificial intelligence market was valued at $136.55 billion in 2022 and is expected to grow significantly in the coming years, backed by mounting investment in AI tech, digital disruption and the pursuit of competitive advantage. It projects a compound annual growth rate (CAGR) of 37.3% from 2023 to 2030, reaching $1,811.8 billion by 2030.

These numbers also look set to translate into boosted GDP figures, as shown in the figure above from a PwC report reviewed by Forbes online.
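The market-size projection quoted above can be sanity-checked with the standard CAGR formula, end = start × (1 + r)^years, rearranged to find the implied rate r. This is a minimal sketch that assumes eight compounding years between the 2022 valuation and the 2030 projection; the function names are illustrative.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Rearranged CAGR formula: r = (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Compound start_value at a constant annual rate for the given number of years."""
    return start_value * (1 + rate) ** years


# Figures quoted above, in US$ billions; 8 years from 2022 to 2030 is an assumption.
rate = implied_cagr(136.55, 1_811.8, years=8)
print(f"Implied CAGR 2022 to 2030: {rate:.1%}")  # 38.2%
print(f"$136.55bn at 37.3% for 8 years: ${project(136.55, 0.373, 8):,.0f}bn")
```

The implied rate of roughly 38% is close to the quoted 37.3%, while compounding $136.55bn at 37.3% for eight years gives roughly $1,724bn rather than $1,811.8bn. The gap likely reflects the forecast applying its 2023 to 2030 growth window to a 2023 base rather than to the 2022 valuation.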

Grant opportunities

The UK and Europe lead the way in providing funding opportunities, including non-dilutive grants, to promote innovation in companies that might otherwise struggle to access funding. Examples include the excellent Innovate UK and the EU’s Horizon Europe funding programmes.

While competition for grants is fierce, if you have a truly state-of-the-art idea and manage to land a grant from either body, you will already have gone through valuable scrutiny and due diligence. This helps ensure your AI development project includes the correct GERB-focused safety measures as part of the plan before it is let loose on your clients and end users. Regular reporting during project delivery, with advice and support, also helps to keep things on track.

The bid competition topics are diverse, to ensure various industries are covered. With AI currently the hottest topic, there are always options to bid on throughout the year. At the time of writing in March 2024, for example, Innovate UK, part of the broader selection of funds offered through UK Research and Innovation (UKRI), has 36 funding opportunities live or soon to open.

Conclusions

The weight of global effort behind ensuring we achieve a safe AI world to operate in needs to be significantly ramped up.

Governments need to increase funding to put regulations in place and design them so they have the longevity to cope with the rapid change that’s inevitable.

Companies need to abide by these new regulations and proactively invest in, and apply, context-specific rules of their own, to avoid ICO-style government penalties as well as negative public sentiment hitting their market cap.

No doubt, punitive measures would be decried as draconian and anti-business by those in the midst of exciting and profitable development, driven by the pressure of high-value investments. This perceived collateral damage is something we have to suck up, though, to avoid the most potentially harmful effects of AI.

Companies need to think deeply about how to protect data from theft, plagiarism and misuse, as well as about the provenance of factual material being disseminated globally. Cryptographic watermarking of content is potentially a way forward here and has huge scope to benefit multiple industries.
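True watermarking embeds a signal in the content itself, but the cryptographic idea underneath can be sketched more simply: the publisher attaches a keyed tag to the content so that tampering can be detected. The sketch below swaps in an HMAC-SHA256 tag from Python’s standard library as a stand-in for a full watermarking or provenance scheme; the key handling is deliberately simplified and the `sign_content` and `verify_content` names are hypothetical. Real provenance systems typically use public-key signatures, so that verifiers do not need the publisher’s secret key.

```python
import hashlib
import hmac
import secrets

# Simplification: a real key would live in a key management service, not be generated per run.
PUBLISHER_KEY = secrets.token_bytes(32)


def sign_content(content: bytes, key: bytes = PUBLISHER_KEY) -> str:
    """Return a hex HMAC-SHA256 tag binding the content to the publisher's key."""
    return hmac.new(key, content, hashlib.sha256).hexdigest()


def verify_content(content: bytes, tag: str, key: bytes = PUBLISHER_KEY) -> bool:
    """Recompute the tag and compare in constant time; any edit to the content breaks it."""
    return hmac.compare_digest(sign_content(content, key), tag)


statement = "Official statement: polls open at 7am.".encode("utf-8")
tag = sign_content(statement)

print(verify_content(statement, tag))                                    # True
print(verify_content(b"Official statement: polls open at 9am.", tag))    # False
```

Even this toy version shows the value for provenance: a one-character change to a published statement is detectable by anyone able to check the tag.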

The good news about getting GERB right is that it will reduce your operating risk and allow you to create higher-quality products that stand up to global scrutiny. Let’s make the stick more carrot.

Any company involved before the AI market matures runs the risks associated with being an early adopter, even the digital behemoths, as Google’s recent woes show. We must resist the temptation to cut corners to get to market first. Maybe think a little more like Apple: don’t be the first, be the best.

Doing things properly in the GERB sense means adopting a safety-first approach to AI solution design. Your Risk Register must be front and centre of your business collateral, and all business initiatives must be considered carefully, up front, to ensure they don’t have an adverse impact outside their obvious remit. Time for the lateral thinkers to shine.
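A risk register does not need to be elaborate to be useful. As a minimal sketch, assuming the common convention of scoring likelihood and impact on 1 to 5 scales and multiplying them (a widespread practice, not something specific to GERB), each risk can be scored and sorted so the most serious ones are reviewed first. The `Risk` class, its fields and the example entries are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """One entry in a simple, hypothetical risk register."""
    description: str
    area: str        # e.g. governance, ethics, regulation or bias
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)
    owner: str

    def __post_init__(self) -> None:
        # Reject scores outside the assumed 1 to 5 scales.
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


register = [
    Risk("Chatbot gives customers incorrect policy information", "governance", 4, 4, "Customer Ops"),
    Risk("Hiring model under-selects a demographic group", "bias", 3, 5, "HR"),
    Risk("Product breaches EU AI Act transparency duty", "regulation", 2, 4, "Legal"),
]

# Highest-scoring risks first, so they are reviewed before anything ships.
for risk in sorted(register, key=lambda r: r.score, reverse=True):
    print(f"{risk.score:>2}  [{risk.area}] {risk.description} (owner: {risk.owner})")
```

Giving every entry a named owner is the design choice that matters most here: a scored risk with nobody accountable for it rarely gets mitigated.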

Finally, the drum I’m beating once again is to keep hold of your subject matter experts. These are the people who will not only work with operational AI expertise to orchestrate the best outcomes when integrating AI, but will also be the legends who spot the cross-product and cross-business-function risks that could make you fall foul of GERB areas at great cost.

If you have found this useful and would like to know how I might help you integrate AI into your company, please reach out to me.